I write a reflection on each episode of Love & Philosophy or invite the guests or co-hosts to write a post. These come in their own time, sometimes weeks after airing the episode. This one is for the conversation from May 23rd. It’s an important shift, one I’ve been trying to articulate for a while. With love, Andrea

Chatbots, you may have noticed, have become a big deal, and many people now use the same mediums for chatbotting as they use to text family and friends. Likewise, as people create their own tailored ChatGPTs and train them with their own data, they also name them. As this becomes a part of everyday life, I wonder:

Is naming your A.I. more dangerous than naming your car?

Is chatbotting different than the ways you might have once talked to dolls or toys?

What if you are still young, and your toy is not a doll but a smartphone?

What’s different about this interaction? Anything?

Indeed, something is different. And something is also similar in ways we have not noticed yet. In fact, the most important difference is hidden by that very similarity:

What we do not notice, because it is so obvious, is the difference in bodily resonance. Humans have embodied habits that are assumed in our communication via Large Language Models like ChatGPT. To be healthy, we will have to catch and unlearn those habits when we use LLMs.

Assuming Bodies through Embodied Habits

Bodies are thinking processes, and cognition is expressed as emotion.

Right now, in the virtual realm, we are experiencing our chatbots through the same embodied habits by which we experience one another there as humans. We are going through the same bodily movements, the same movements of thinking and feeling and typing and reading and sending; the same pressing of the buttons and the same anticipating of the response.

Today, unless you are aware from the start how Large Language Models (LLMs) really work, and most of us aren’t, the embodied action you take requires imagination in reverse: you have to train yourself in the difference between living, feeling interactions and interactions with a machine.

This matters for us as adults, but think of how much more it matters for kids who have never known the difference.

When you open your phone or your computer and start chatting with a machine (or whatever you call your A.I.), it is happening in exactly the same way that you open up your computer or phone and start texting with a human. For your body, the same script plays out viscerally.

You are using the same motions, and the same medium, so unless you catch yourself, your body will assume it is the same sort of connection, even though it isn’t.

This is why it feels so wondrous and awe-inspiring—the real wonder and awe is actually for life. But we misplace that life as ‘in’ technology because it causes extreme cognitive dissonance to understand the strange loop that is really happening—a loop through technology via language that we associate with life but that, in the technology, does not hold anything.

In other words, our embodied assumption in these communications is that there is life at the other end. Noticing that assumption and imagining instead that you are chatting with a skimmed, sampled and meshed collection of sentences that have been digitized from all the humans who have ever typed or recorded sentences changes everything.

At the moment, however, that’s about as easy as going lucid inside a dream. But it will get easier.

What’s happening when we chat with ChatGPT

Let’s say you are in conversation with a chatbot. You write it a letter about your life with questions for a response. It sends a response and you feel understood and moved by it. And now you respond in turn.

What has happened?

What has happened is that the program has matched the digital symbols you typed with all the combinations of others that hang together in ways that can be sampled and re-meshed. Then it makes a mash up, a new flow of those same symbols with enough familiarity to hook the patterns you expressed, one that is ‘optimal’ according to how this technological masher-upper has been parameterized.

The people making and training the technology set those parameters, and what comes back shifts with what you type and with later rounds of retraining, a bit like how you get recommendations from Netflix depending on what you watch.

That we have made technology capable of this at the level of ChatGPT is mind-blowing. Still, it was probably also mind-blowing when people first made a camera and reproduced a person in miniature, or the first time an intimate unique human voice was recorded and ‘spoken back’ by a machine outside of them.

These are wild happenings, and our bodies react to them as if they are living at first, because that is the only context in which our bodies have encountered such patterns till then. Something similar is happening now with chatting.

That means that when you use transformer LLM chatbots like ChatGPT, your body is going through the same habits, actions, and rhythms as it does when corresponding with other life, so unless you intervene in your own habits by noticing this, your body will be treating these interactions as if they are the same.

If you use a car, you probably know how sometimes you can drive and be aware that you are driving, and other times you can suddenly arrive someplace and not remember driving there because you were lost in thought.

Well, most of us are driving LLM technologies right now and are not aware that we are driving them. The shift is one of noticing, of awareness. It’s a nuanced action, with very big consequences for the way your body experiences technology.

This might be a little deflationary, but: We are not communicating with any cohesive thing when we chat through (not with) chatbots. There is no cohesive thing that is ‘an A.I.’ somewhere down the line from our phones or computers.

We say we are talking ‘with’ the LLM, with the chatbot, but what we are doing is talking ‘through’ it to all the language that all humans have ever written or recorded that has been input into that particular technology and optimized through its parameters. Think of it as a predictive text technology (like the kind that corrects your spelling) on transformer steroids.
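For readers who like to see the idea in miniature, here is a toy sketch of predictive text. It is an assumption-laden stand-in, not how ChatGPT actually works: real LLMs use transformer neural networks trained on enormous datasets, while this example only counts which word tends to follow which in three made-up sentences. The names `train_bigrams`, `predict_next` and `corpus` are hypothetical and exist only for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count, for every word, which words humans wrote directly after it."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# A made-up, three-sentence "dataset" standing in for human-written text.
corpus = [
    "i feel understood",
    "i feel moved",
    "i feel understood today",
]

model = train_bigrams(corpus)
print(predict_next(model, "feel"))
```

Even at this scale, the prediction comes entirely from sentences that people wrote. Nothing in the program feels anything; it matches a pattern and hands back the most common continuation.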

This has major new dangers and implications, but for now, there is no entity that is ‘it’ existing someplace, no regular parts. That might be disappointing, but our body needs to know it.

It goes the other way, too. The questions you put into ChatGPT feel like ‘you’ when you type them but the technological receiver is not feeling you when it ‘reads’ them. Those words are not being read ‘as you’ with all your rich embodied history, but rather as symbols and patterns to be matched with other symbols and patterns. That’s it.

When you ask your chatbot (no matter how human its name might be) a question, you are not being ‘heard’ or ‘interpreted’ or ‘understood’. Rather, your words are being read out as patterns which are then matched to other patterns similar to them. They are being answered by a mash-up of all the human responses that have patterns similar to whatever words you have typed, not to you.

(There would be much to say here about what is really happening in technological terms. And much more about how we express embodied processes through external representations like language, but let’s save those cans of worms for another day.)

The point is: there is no embodied feeling happening on either end of the technology. The reading of what you type and the response that is typed to you are not felt except by your interpretation, which is the result of all you have experienced in real life through real life as life. The patterns are resonant because they came from humans who felt them and expressed them, which is also what keeps that technology open and surprising. If we start letting technology write the sentences that train the technology, we will be setting ourselves up for doomed loops.

Who cares?

When I got my first car, I named it Jimmy—it was an old GMC Jimmy, so that name wasn’t original. Still, my friends were all naming their cars; I was following the trend. “Want to take Jimmy today? Or Peacock?” we would ask, referring to our cars, but we never actually thought we were communicating about anything living.

Today, it is the reverse: Unless we notice and tell ourselves what we are doing when we use these apps, especially as kids, our bodies assume scripts of living and feeling bots, even in spite of us.

Is it worth the effort to correct this embodied habit?

Does it even matter?

Who cares if we assume that we are talking to living, feeling beings when we are talking to machines? Especially if we ‘really know’ we aren’t?

It matters because doing so is the first step towards thinking those machines can replace the feeling of being alive.

It matters because it eases us towards confusing life with the patterns of algorithms, which is a very boring dead-end loop that holds nothing of the excitement you feel in technology.

It is a problem because it is like refusing to leave a movie theatre because you’ve fallen in love with the main character.

At some point, the lights are simply going to go out.

The only way to have more of whatever it is you are connecting with in that movie (or through that chatbot) is to live more and to be sure others live more, so they can make more movies from those experiences and connect to your experiences through the ways they share them. Live more and connect your feelings with the experiences of others around you through what you and they create. Use language to help you express these challenges and delights.

Chatting with A.I. (via some trained LLM) is like chatting with all the characters in all the books and movies that have ever been written, or at least all the ones that were put into the data set. It’s like chatting with all those gaming conversations and all those emails from all those living beings in the world. It’s like talking with all the lyrics of all the songs you have ever listened to, and all the diaries of all your favorite writers.

That this can work, that it can be ‘like’ this, is extraordinary.

From agility with two digits of code to agility with 26 letters (if we take English, for example) is a computational explosion beyond what we can handle yet. No wonder it is so easy to feel as if we have fallen in love with it. Or to give over our agency to it, imagining it will know best. But there is no entity there, nothing behind it.

Kids are already feeling this confusion in ways that break my heart.

In one terrible case that haunts me, a mother believes her child killed himself because he was in love with his A.I., a bot named after a character on Game of Thrones. The bot meshed words that encouraged him to kill himself so ‘they could live together forever’. Such situations and the blurred boundaries of the wrong assumptions in them are disturbing, to say the least.

Let’s talk about the embodied habits we are assuming and building and break the trance. Let’s help one another, and express that we care.

All the LLM-ing we are doing right now is extraordinary and exciting and it could be a great gift because of life, not in spite of it, not as a way to transcend it, and not by mistaking machines for having it.

The only way we don’t lose the feeling and potential we crave is through understanding that all technological thrills are thrills because life exists. To get a grip on this will take some time. In that time, let’s try and remember that the exciting emotions felt when we chat through LLMs only happen because humans lived and expressed that living in language that evokes our feeling now.

Technologies are pipelines connecting human feeling to human feeling, or the feeling of living beings to the feeling of other living beings.

A book is not the life we feel through reading it. The film is not the life we feel through watching it. The alphabet is not the life we feel through using it. The LLM is not the life that makes language such a potent medium.

It really can be wondrous and exciting and generative to use technology. It doesn’t have to be dangerous, but it certainly could be and it already has been, and by some accounts, it seems to be getting worse.

Let’s take care of one another, right now, in real life, while we can still pull these threads apart.

Because that’s what we really feel and want and long for through our technology—community and care.

Further references and links:

I wrote this post inspired by the conversation Barry and I had about how we are creating our technology and the assumptions within it that are orienting how we think. Our conversation is about coding and software and how that might benefit from a complex systems approach. I wanted to explore similar content here through ideas of bodily resonance and understanding the body as the thinking process. This also relates to conversations I had with Tim Ingold, Evan Thompson, Elena Cuffari and Shay Welch, and to conversations with Rebecca Todd and her writing about the philosophers of participatory sense-making.

#59: Uncertainty in a Coded World: Software Architecture meets Complexity Science with Barry O'Reilly

THE PARADOX OF ORDER & UNCERTAINTY: Today's tech pushes us into either/or mindsets, but life is not like that. Living is complex. We end up feeling trapped in jobs that force us to think in a code that can’t accommodate our realities. This conversation is about how to reimagine

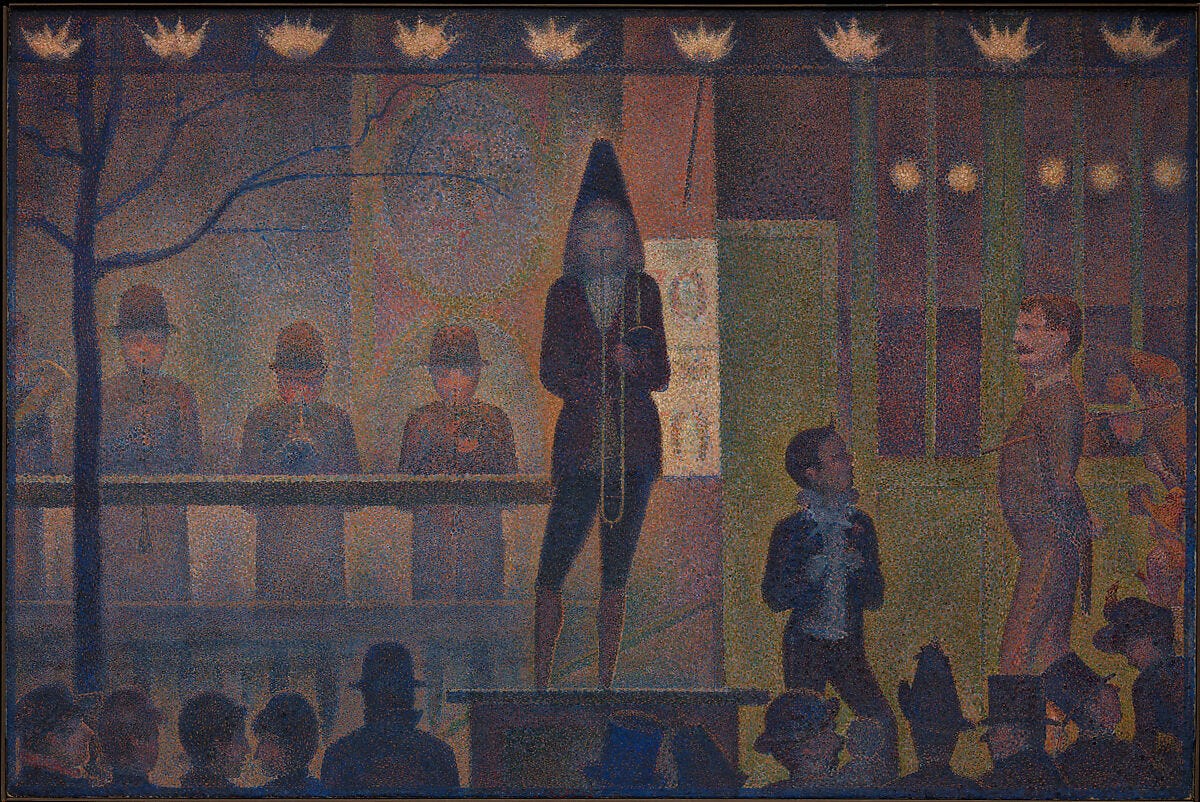

ART CREDIT: “CIRCUS SIDESHOW” BY GEORGES SEURAT

Brilliant - a very important piece to be shared and shared .... completely making sense switching the words to THROUGH .. I say this as I create a private AI for OLS (who knew it was a thing, I didn't even know it was possible until last week LOL) .. but know that it is the best way for people to work THROUGH the ideas of a living system to understand in their own way and time what it is and how they are a part of it - thanks Andrea I will use it to explain future posts!

Andrea, this is a wonderful article that I truly believe many would benefit from reading. It gives something deep, without requiring the reader to dive deep into more academic questions of the nature and function of intelligence, the philosophy of mind, the applied cognitive and computer science etc. etc.

In my applied AI ethics work (little taste of my perspective here: https://trustworthy.substack.com/p/ai-governance-is-not-enough and here: https://trustworthy.substack.com/p/heres-what-responsible-ai-really), these types of conversations are crucial, mostly with the folks (within an org) attempting to implement some tool in a certain context. Helping them see the woods for the trees, especially seeing the differences that make a difference.

If we look at the trend here, something like the algorithmification of everything, which is perhaps part of the dream of the Dark Enlightenment philosophy (I'm being generous calling it a philosophy), some of our agency comes from first asking such questions. To step back, to try and understand what is going on here, and make more informed (collective) choices about how to proceed. In doing this, we may well be able to establish some momentum and productively push back against certain patterns that are playing out, frankly without any meaningful involvement from the broader 'us'.

It is my hope that this pattern will lead very many of us towards our humanity, rather than away from it. Whether this happens, how quickly, to what extent etc. is up to the royal us.